
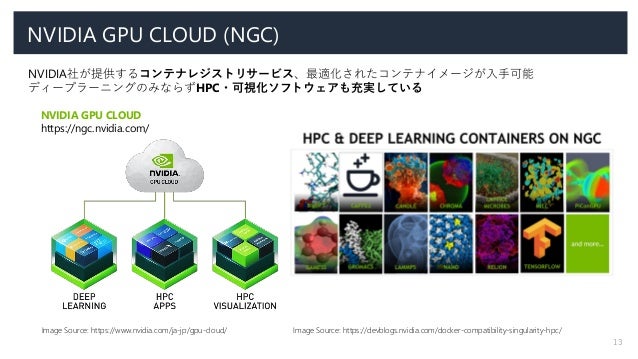

Singularity Container Nvidia. Save the HPL-NVIDIA and HPL-AI-NVIDIA NGC container as a local Singularity image file. Since the driver is an older version that CUDA 11.1 is not compatible with, I solved this by using the CUDA 10.0 / cuDNN 7 version of this image instead, and I am then able to both compile and run the samples. One of the following NVIDIA GPUs: Pascal (sm60), Volta (sm70), Ampere (sm80); x86_64. HPC Containers from NVIDIA GPU Cloud: NVIDIA GPU Cloud (NGC) offers a container registry of Docker images with over 35 HPC, HPC visualization, deep learning, and data analytics containers.

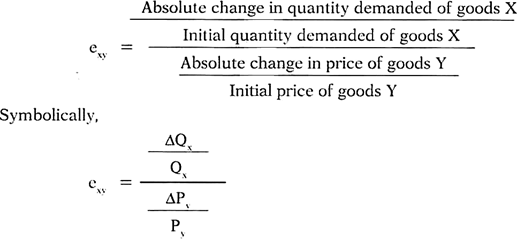

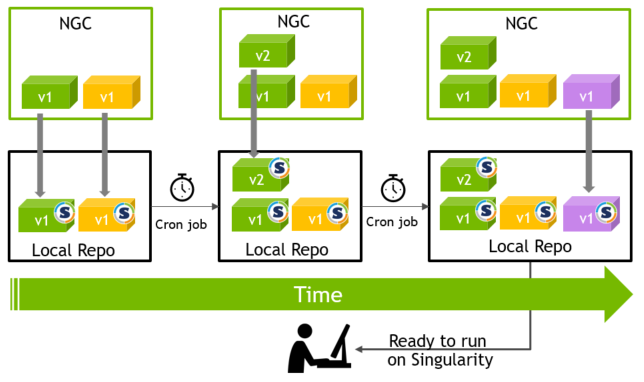

Automating Downloads with NGC Container Replicator Ready-to-Run on Singularity (NVIDIA Developer Blog). From developer.nvidia.com

Install Singularity containers as modules on your HPC system, exposing custom aliases for entrypoints and interactions like exec, run, shell, and inspect. The shpc library is automatically updated and includes a library of containers from Docker Hub, NVIDIA, BioContainers, and more. One of the following CUDA driver versions: r460. Docker is not runnable on ALCF's ThetaGPU system for most users, but Singularity is. After the Singularity container has been started, use the Nsight Systems CLI (`nsys`) to launch a collection within the Singularity container.

I have been able to build a Docker container that is able to run Vulkan (`vulkaninfo` gives correct output), but when I pull that container to Singularity, or rebuild it from scratch using a Singularity definition file based on the Dockerfile, I cannot seem to get Vulkan working.

The software environment of the container is determined by the contents of the Singularity image, and what is run within the container will not affect the host. This command saves the container in the current directory as `hpc-benchmarks:21.4-hpl.sif`. The container specification format can be controlled with the `--format` command line option, set to `docker` to generate a Dockerfile (default) or `singularity` to generate a Singularity definition file. CPU with AVX2 instruction support. Designed to be at the edge and an autonomous device while.

Source: developer.nvidia.com

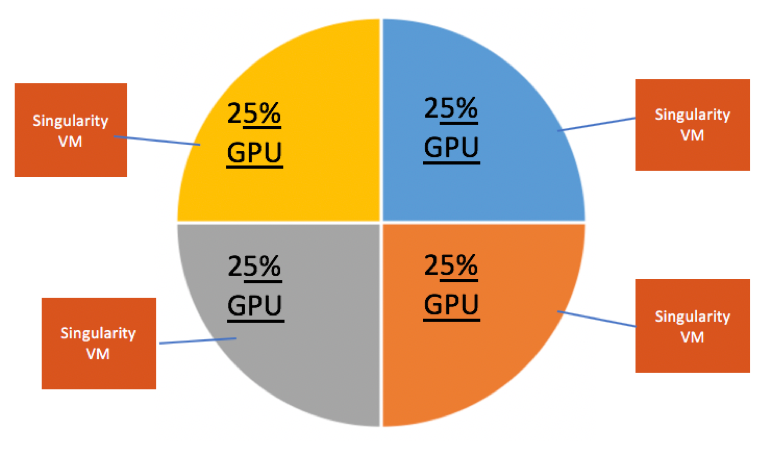

While Docker is prevalent primarily in enterprises, Singularity has become ubiquitous in the HPC community. To control which GPUs are used in a Singularity container that is run with `--nv`, you can set `SINGULARITYENV_CUDA_VISIBLE_DEVICES` before running the container, or `CUDA_VISIBLE_DEVICES` inside the container. Pascal (sm60), Volta (sm70), or Ampere (sm80) NVIDIA GPUs; CUDA driver version 460 or 450.36.06, r418.40.04, r440.33.01; arm64. There is an increasing need for machine learning applications to leverage GPUs as a mechanism for speeding up the processing of large computations. In this example the base image can be set by the `--userarg image=X` command line option, where X is the base container image name and tag.
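As a rough illustration of the GPU selection described above, the sketch below prepares an environment and an argument list for a `--nv` run. The helper names, the image name `container.sif`, and the GPU indices are hypothetical. Nothing is launched, so it can be tried on a machine without Singularity installed.

```python
import os


def gpu_env(gpu_ids, base_env=None):
    """Copy an environment and restrict the GPUs the container will see.

    Singularity passes SINGULARITYENV_<NAME> into the container as <NAME>,
    so this ends up as CUDA_VISIBLE_DEVICES inside the container.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["SINGULARITYENV_CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env


def exec_nv_command(image, command):
    """Argument list for running `command` in `image` with GPU support."""
    return ["singularity", "exec", "--nv", image, *command]


# Hypothetical image name and GPU indices, for illustration only.
env = gpu_env([0, 2], base_env={})
argv = exec_nv_command("container.sif", ["nvidia-smi"])
print(env["SINGULARITYENV_CUDA_VISIBLE_DEVICES"])
print(" ".join(argv))
# To actually launch: subprocess.run(argv, env=gpu_env([0, 2]), check=True)
```

Setting the variable on the host side keeps the selection outside the image, so the same image can be reused with different GPU assignments.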

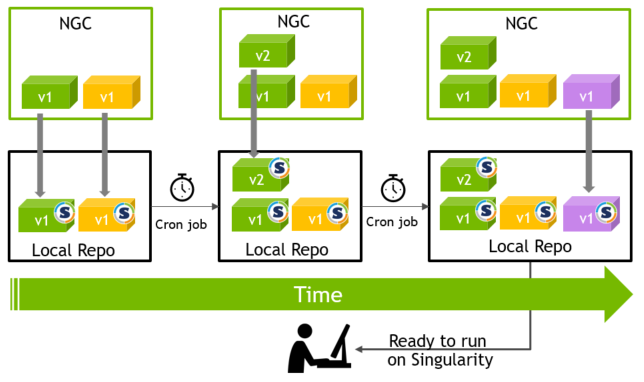

Source: blogs.vmware.com

To convert one of these images to Singularity, you can use the following command. Michael Bauer, University of Michigan / Lawrence Berkeley National Lab. The NGC NAMD container provides native Singularity runtime support.

Source: alibaba-cloud.medium.com

Before running the NGC RELION container, please ensure your system meets the following requirements. Example of running an MPI. I have figured out what I did wrong. Singularity proper will be the best solution if you want to pull and otherwise interact with Docker images.

Source: developer.nvidia.com

In addition, the Singularity runtime is designed to load and run Docker format containers, making Singularity one of the most popular container runtimes for HPC. In the above, the directive will reference an actual container provided by the module for the version you have chosen to load.

Source: medium.com

Singularity attempts to make available in the container all the files and devices required for GPU support.

Source: alibaba-cloud.medium.com

NGC supports both Docker and Singularity container runtimes. However, when building the image we can use some knowledge and install, for example, NVIDIA CUDA libraries if we know it will support the system we are targeting. The nvidia-container-runtime explicitly binds the devices into the container, dependent on the value of `NVIDIA_VISIBLE_DEVICES`.

This variable will limit the GPU. The result file can be opened or imported into the Nsight Systems GUI on the host like any other CLI result.
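A collection with the Nsight Systems CLI inside a container might be assembled as in the sketch below. It assumes that `nsys` is installed inside the image, and the image name `app.sif`, the application `./my_app`, and the helper name are all hypothetical. The command is only printed, not executed.

```python
import shlex


def nsys_in_container(image, app_argv, report_name="report"):
    """Build a command that runs `nsys profile` inside a GPU-enabled container."""
    return [
        "singularity", "exec", "--nv", image,
        "nsys", "profile", "-o", report_name, *app_argv,
    ]


# Hypothetical image and application names, for illustration only.
profile_cmd = nsys_in_container("app.sif", ["./my_app", "--steps", "100"])
print(shlex.join(profile_cmd))
```

If the working directory is shared between host and container, the report written via `-o` should be reachable from the host for import into the GUI.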

Source: blogs.vmware.com

Singularity Container: a file (image) running an operating system on top of the host system's operating system. Singularity HPC Registry using LMOD. Before running the NGC Quantum ESPRESSO container, please ensure your system meets the following requirements.

Source: researchgate.net

One of the following container runtimes. `singularity build tensorflow-20.08-tf2-py3.simg docker://nvcr.io/nvidia/tensorflow:20.08-tf2-py3`.

Source: medium.com

Source: developer.nvidia.com

Why Using Singularity Containers to Run TensorFlow on the NVIDIA Jetson Nano.

Source: medium.com

Important elements above are the use of the `--nv` option to enable the container to use NVIDIA GPUs, and the use of `--bind` to give the container access to a specific project folder at the same location as on the MeluXina filesystem, so that any scripts referencing the path can be used without modification. Anaconda, for example, installs the CUDA runtime package for code that uses it.
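The combination of GPU access and a same-path bind mount can be sketched as follows. The project path `/project/myproj`, the image name, the script, and the helper name are hypothetical placeholders. The command is only printed here, not executed.

```python
import shlex


def exec_with_project(image, project_dir, command):
    """Run `command` with GPUs enabled and `project_dir` bound to the same path.

    Binding a directory to itself means scripts that reference the host path
    keep working unchanged inside the container.
    """
    bind_spec = f"{project_dir}:{project_dir}"
    return ["singularity", "exec", "--nv", "--bind", bind_spec, image, *command]


# Hypothetical paths and image name, for illustration only.
run_cmd = exec_with_project(
    "tensorflow.sif",
    "/project/myproj",
    ["python", "/project/myproj/train.py"],
)
print(shlex.join(run_cmd))
```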

Source: developer.nvidia.com

An environment file in the module folder will also be bound. You'll need to run this command on a Theta login node, which has network access (thetaloginX).

Hi, I am trying to run a program that uses Vulkan inside of a Singularity container for an HPC environment. However, the NVIDIA Container Cloud uses a slightly different authentication protocol (use of `$oauthtoken` as the username and an API token as the password), and so this client helps to support those customizations.
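One way to supply these credentials, independent of the client mentioned above, is through the `SINGULARITY_DOCKER_USERNAME` and `SINGULARITY_DOCKER_PASSWORD` environment variables that Singularity reads when pulling from a Docker registry. The sketch below assumes that approach. The helper names, the placeholder API key, and the image reference are hypothetical, and nothing is actually pulled.

```python
def ngc_auth_env(api_key, base_env=None):
    """Environment with NGC registry credentials for Singularity.

    The username is the literal string "$oauthtoken" (not a shell variable);
    the password is the NGC API key.
    """
    env = dict(base_env or {})
    env["SINGULARITY_DOCKER_USERNAME"] = "$oauthtoken"
    env["SINGULARITY_DOCKER_PASSWORD"] = api_key
    return env


def pull_command(image_ref):
    """Argument list for pulling a Docker-format image from nvcr.io."""
    return ["singularity", "pull", f"docker://nvcr.io/{image_ref}"]


# Placeholder key and image reference, for illustration only.
auth_env = ngc_auth_env("MY_NGC_API_KEY")
pull_cmd = pull_command("nvidia/tensorflow:20.08-tf2-py3")
print(sorted(auth_env))
print(" ".join(pull_cmd))
# To actually pull: subprocess.run(pull_cmd, env={**os.environ, **auth_env}, check=True)
```

When exporting the username from a shell, single quotes keep `$oauthtoken` from being expanded as a variable.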

Source: slideshare.net

`singularity build OUTPUT_NAME NVIDIA_CONTAINER_LOCATION`.
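The general `singularity build OUTPUT_NAME NVIDIA_CONTAINER_LOCATION` form can be filled in programmatically, as in this sketch. The helper names are hypothetical. The TensorFlow tag `20.08-tf2-py3` is taken from the command quoted in the text, with its punctuation reconstructed, so treat the exact spelling as an assumption.

```python
def ngc_docker_uri(repository, tag, registry="nvcr.io"):
    """Docker-style URI that Singularity accepts as a build source."""
    return f"docker://{registry}/{repository}:{tag}"


def build_command(output_name, container_location):
    """Argument list for `singularity build OUTPUT_NAME NVIDIA_CONTAINER_LOCATION`."""
    return ["singularity", "build", output_name, container_location]


# Repository and tag as quoted in the text (punctuation reconstructed).
uri = ngc_docker_uri("nvidia/tensorflow", "20.08-tf2-py3")
build_cmd = build_command("tensorflow-20.08-tf2-py3.simg", uri)
print(" ".join(build_cmd))
```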

Singularity Containers for Reproducible Research.

Source: medium.com

Singularity is an open source container engine that is preferred for HPC workloads and has more than a million container runs per day with a large, specialized user base. The driver is provided by the host OS.

Source: blogs.vmware.com
